Why Using Amazon Bedrock in Your Mendix Apps is a Must

Creating fast, intuitive, smart applications is a necessity. While the landscape of options for creating them may become clearer by the day, finding the right tools can still feel like climbing a mountain.

In this blog post, we will share our team’s personal experience with the Amazon Bedrock service and shed some light on why using this service within your applications is a must.

Mendix and AI

Mendix has made a dynamic entry into AI-enhanced app development for enterprise applications. Our platform lets you deliver smart, intuitive, and personalized apps with AI capabilities.

In our journey to find the best for Mendix app developers, we have managed to bring together the most intuitive AI services from AWS to help make smart enterprise application development feel like a walk in the park.

You can follow our journey through:

- The Mendix Community YouTube channel

- The developer blog

- The currently available Mendix AWS connectors

- The Academy learning path, if you want to learn how to build your own connector

As a team, our purpose is to provide tools that developers will find valuable in their development practice. We also spend time investigating and highlighting dynamic and easy-to-use smart features.

Recently, we explored a newly released service from AWS, Amazon Bedrock, for which we created a Mendix Amazon Bedrock connector and an example implementation.

What is Amazon Bedrock?

Amazon Bedrock is a fully managed service that makes foundation models (FMs) from Amazon and leading AI startups available through an API.

Through this API, you can choose from various FMs to find the model that’s best suited for your use case. It promises a quick start to a serverless experience with foundation models.

What are foundation models?

The first definition of foundation models was given by Stanford University. It describes them as models trained on broad data at scale that can be adapted to a wide range of downstream tasks. Examples include BERT, GPT-3, Claude, and Amazon Titan.

Because we were able to gain early access to Amazon Bedrock, we found the latest versions of the following foundation models available:

Text

- Amazon Titan Large v1.01

- AI21 Labs Jurassic-2 Ultra

- Anthropic Claude 2

Image

- Stability AI Stable Diffusion XL v2.2.2

To make things more exciting for our team while learning about this service, we decided to hold a hackathon day: “Us against Amazon Bedrock.”

It was astonishing to witness how swiftly we made progress in a trial app. Within 15 minutes, we had successfully integrated and retrieved a list of all the models available in Amazon Bedrock.

Even more impressive, in just under an hour, we were already experimenting with Amazon Titan and producing images using Stability AI’s Stable Diffusion XL.
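For readers who want to try the same first step outside Mendix, here is a minimal Python sketch of retrieving the model list. It assumes the boto3 `bedrock` client and its `list_foundation_models` call; the helper name `list_model_ids` is ours, purely for illustration. Our hackathon app did this through REST calls in Mendix, so treat this as an equivalent illustration rather than what we built.

```python
def list_model_ids(bedrock_client):
    """Return the IDs of the foundation models visible to the caller.

    `bedrock_client` is assumed to be a boto3 "bedrock" client (or any object
    exposing a compatible list_foundation_models method).
    """
    response = bedrock_client.list_foundation_models()
    return [summary["modelId"] for summary in response["modelSummaries"]]


# Usage, assuming AWS credentials and a region where Amazon Bedrock is enabled:
#   import boto3
#   print(list_model_ids(boto3.client("bedrock")))
```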

Later in this post, the team members who implemented the foundation models within the test application will share their personal experiences, both the struggles and the exciting moments.

Stable Diffusion XL

In the world of generative AI, Stability AI is a company to keep on the radar. The open-source company launched its AI initiative in 2021 and now counts a community of more than 200,000 creators, developers, and researchers.

Stability AI uses the powerful Ezra-1 UltraCluster supercomputer to create the Stable Diffusion XL models, giving users a unique experience.

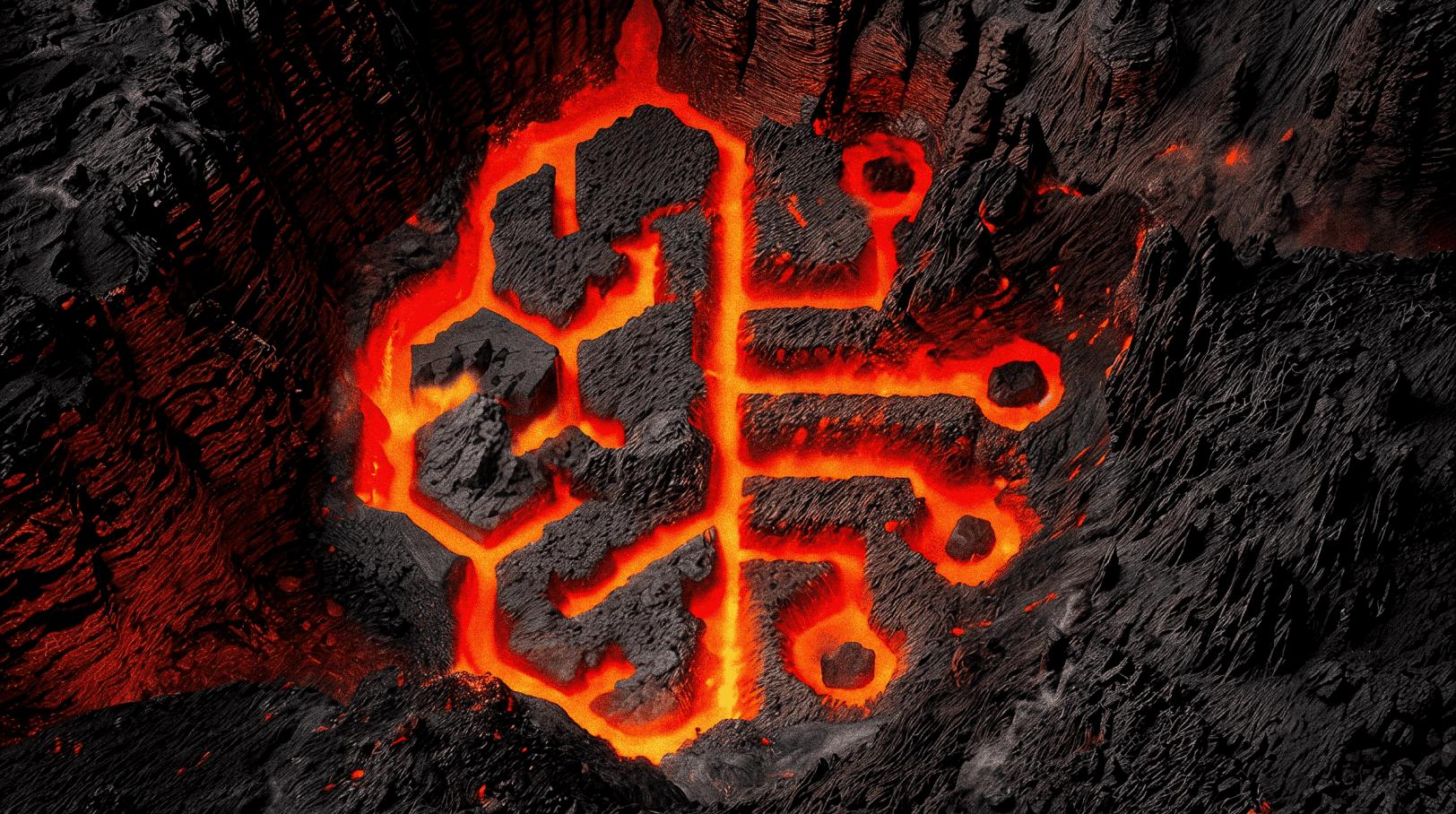

Stable Diffusion 2.0 was released in November 2022, but Stable Diffusion XL is still only publicly available as a beta version. Stable Diffusion XL is a text-to-image AI that can create descriptive images from shorter prompts and generate words within images. To illustrate, all the pictures in this blog were created with Stable Diffusion XL.

Agapi Karafoulidou on implementing Stable Diffusion XL

“During the ‘Us against Amazon Bedrock’ hackathon, I rushed into the Stable Diffusion XL implementation. My initial thoughts were a bit pessimistic, but that did not stop me.

“At the Amazon Bedrock test page, I was able to find the API request in a JSON format. Once I had that at hand, I easily created the JSON structure within the Mendix app and then created an export mapping.
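As a rough illustration of the kind of JSON structure involved, the Python sketch below builds a Stable Diffusion XL request body. The field names (`text_prompts`, `cfg_scale`, `steps`, `seed`) and the default values are assumptions based on the request format as we understand it; the example shown on the Amazon Bedrock test page remains the authoritative version. The function name is hypothetical.

```python
import json


def build_sdxl_request(prompt, cfg_scale=10, steps=50, seed=0):
    """Build a JSON request body for a text-to-image call.

    Field names and defaults are assumptions; compare them with the example
    request shown on the Amazon Bedrock test page before relying on them.
    """
    return json.dumps({
        "text_prompts": [{"text": prompt}],  # the prompt(s) to render
        "cfg_scale": cfg_scale,              # how closely to follow the prompt
        "steps": steps,                      # number of diffusion steps
        "seed": seed,                        # fixed seed for repeatable output
    })
```

In Mendix, the same structure is what the export mapping produces from the request entities.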

“After that, a microflow with a POST API call was added, and, of course, I had to add the necessary headers. Thankfully, they were visible in the API request. I ran the app, added the prompt ‘give me the perfect picture’ and clicked.”

This was the result:

Amazon Titan

Amazon Titan is a generative large language model for text generation tasks like creating a blog post. In case you are wondering after reading that last sentence, the summary portion of this blog was created with the help of AI, but the rest of it was not. 🙂

Amazon Titan is, of course, trained with many datasets that put it in the general-purpose category of models. Find out more about Amazon Titan.

Daan Van Otterloo on the implementation of Amazon Titan

“As a new Mendix AWS team member, I was excited and happy to start my work with the Amazon Bedrock connector. Generative AI has made some awesome leaps in the last few years and has become a hot topic since the release of ChatGPT. The connector makes it possible for Mendix developers to easily integrate these models into their applications, which will add value to so many applications in so many ways.

“Personally, I had the pleasure to develop part of the example implementation of the Amazon Bedrock connector. As such, I got to play around with most of the models, especially the Amazon Titan Text Large model.

“This is a general-purpose generative LLM (Large Language Model) that can help users with things like:

- Text generation

- Code generation

- And idea brainstorming, among other use cases

“My favorite way to use models like Amazon Titan is for inspiration, such as asking the model for recipes, ideas for presents, or simply asking it for movie or music recommendations.

“We decided to connect to the Amazon Bedrock service using REST API calls. Here’s how I did that:

- To get started, I logged into my AWS account and navigated to the Amazon Bedrock playground.

- I selected the Amazon Titan Text Large model and started playing around. (On this page, you will also find a request JSON example that can be copied and used in Mendix for creating the export mapping that will be used for the REST call invoking Amazon Titan.)

- In Mendix, I used the debugger to check and copy the response so that I could create the data model and microflow logic used for importing the response.

“This is all I had to do to be able to utilize the model, and now it will be up to our creative Mendix community to generate value using these models!”
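The request and response handling Daan describes can be sketched in Python as follows. This is a minimal illustration, assuming the Titan text request uses `inputText` with a `textGenerationConfig` object and that the response carries a `results` list with `outputText` entries; verify both against the playground's JSON example and the response you see in the debugger. The function names are hypothetical.

```python
import json


def build_titan_request(prompt, max_tokens=512, temperature=0.0, top_p=0.9):
    """Build the JSON body for a Titan text request (assumed field names)."""
    return json.dumps({
        "inputText": prompt,
        "textGenerationConfig": {
            "maxTokenCount": max_tokens,
            "temperature": temperature,
            "topP": top_p,
            "stopSequences": [],
        },
    })


def extract_titan_text(response_json):
    """Join the generated text of every result in the (assumed) response shape."""
    payload = json.loads(response_json)
    return "".join(result["outputText"] for result in payload["results"])
```

The first function plays the role of the export mapping, and the second plays the role of the import mapping and the microflow logic that reads the response.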

Jurassic-2 Ultra

AI21 Labs, an Israel-based company, was founded in 2017 with the mission to build AI systems with the ability to understand and create natural language. Their products include the foundation model Jurassic-2 Ultra.

According to AI21 Labs’ documentation, with the Jurassic-2 models, you can:

“…generate a text completion for a given text prompt by using our Python SDK or posting an HTTP request to the complete endpoint corresponding to the desired language model to use. The request contains the input text, called a prompt and various parameters controlling the generation. For authentication, you must include your API key in the request headers. A complete response contains the tokenized prompt, the generated text(s), called completion(s), and various metadata.”

Nicolas Kunz Vega on the implementation of Jurassic-2 Ultra

“Contrary to what their name may suggest, the Jurassic-2 Ultra foundation models have nothing to do with the prehistoric era but are instead cutting-edge LLMs. What really amazed me while working with the Jurassic-2 Ultra models was the number of ways the requests could be tweaked to manipulate the response returned by the model.

“Next to the more common input parameters found in various models available on Amazon Bedrock, like the maximum number of tokens to return or the temperature that controls how creative (or repetitive) the model gets with the responses, Jurassic-2 Ultra provides some advanced inputs.

“For example, there is the option to penalize the use of tokens based on:

- Their presence in the given prompt

- The frequency with which they are used, and

- Their count in the generated responses

“This can be expanded to also consider whitespaces, emojis, numbers, and more. This variety of inputs helped me to get a better understanding of what influences the model when generating a response to a given prompt. It also helped in demystifying the black box that generative AI is to most of us.

“When it came to implementing the response, I found that the Jurassic-2 Ultra models returned a more detailed response than other models I had seen on Amazon Bedrock so far. Next to the generated text and some metadata, it will also provide you with every generated token and its probability scores. It’s also possible to get the best alternatives to the chosen tokens, which, I guess, can be seen as the “AI thought process” the model goes through when generating the output.

“All in all, it was a fun, hands-on experience implementing the Jurassic-2 Ultra models in Mendix and especially playing around with building all kinds of requests and seeing the influence they have on the responses that get sent to the Mendix app!”
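To make the penalty inputs and the per-token output more concrete, here is a small Python sketch. It assumes the Jurassic-2 request accepts `presencePenalty`, `frequencyPenalty`, and `countPenalty` objects with a `scale` and `applyTo...` flags, and that each generated token in the response carries a natural-log `logprob`. These names reflect the AI21 format as we understand it and should be checked against AI21 Labs' documentation; the helper functions are hypothetical.

```python
import json
import math


def build_penalty(scale, whitespaces=False, emojis=False, numbers=False):
    """One penalty object; the flags widen which token types are penalized."""
    return {
        "scale": scale,
        "applyToWhitespaces": whitespaces,
        "applyToEmojis": emojis,
        "applyToNumbers": numbers,
    }


def build_jurassic_request(prompt, max_tokens=200, temperature=0.7,
                           presence=None, frequency=None, count=None):
    """Build a request body, adding only the penalties that were supplied."""
    body = {"prompt": prompt, "maxTokens": max_tokens, "temperature": temperature}
    if presence is not None:
        body["presencePenalty"] = presence
    if frequency is not None:
        body["frequencyPenalty"] = frequency
    if count is not None:
        body["countPenalty"] = count
    return json.dumps(body)


def token_probabilities(response_json):
    """Return (token, probability) pairs for the first completion.

    Assumes logprob is a natural logarithm, so exp() recovers the probability.
    """
    payload = json.loads(response_json)
    tokens = payload["completions"][0]["data"]["tokens"]
    return [
        (t["generatedToken"]["token"], math.exp(t["generatedToken"]["logprob"]))
        for t in tokens
    ]
```

Changing one penalty at a time and comparing the returned token probabilities is a simple way to see how each input influences the output.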

Claude 2

Anthropic is a company with the mission to “ensure that transformative AI will help people and society to flourish.” The San Francisco-based company was founded in 2021 by former OpenAI members with the vision to build systems that people can rely on.

Anthropic’s product Claude 2 was designed to reduce brand risk (Constitutional AI is built in). It can handle large amounts of content and can be personalized per use case. Find out more about it here.

Casper Spronk on implementing Anthropic Claude 2

“Implementing Anthropic Claude 2 was quite a breeze. AWS already provides the correct syntax for all three models, and the syntax is identical except for the model ID field, so the models could be implemented using the same request and response structure.

“By adding a version enumeration on the request and then mapping this to the corresponding model ID string, a single microflow can be used for all three models. It is then up to the developer implementing the model to choose if they want to allow the end-user to select a model or if the model will always be the same.

- The body of the request can be mapped with a simple export mapping.

- Then, set the output of the export mapping as the body of the generic request.

- After calling the Invoke Model Generic microflow action, the import mapping is performed on the response body attribute.

- The completion attribute on the InvokeModelResponseAthropicClaude entity will then contain the response that Claude 2 has generated.
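Outside Mendix, the version-enumeration idea from the steps above might look like the Python sketch below. The model ID strings and the `max_tokens_to_sample` and `completion` field names are assumptions based on the Claude text-completion format as we understand it; check the IDs against the model list that Amazon Bedrock returns. The class and function names are hypothetical.

```python
import json
from enum import Enum


class ClaudeVersion(Enum):
    """Maps a version choice to a model ID string (IDs are assumptions)."""
    CLAUDE_V1 = "anthropic.claude-v1"
    CLAUDE_V2 = "anthropic.claude-v2"
    CLAUDE_INSTANT_V1 = "anthropic.claude-instant-v1"


def build_claude_invocation(version, prompt, max_tokens=300):
    """Return (model_id, json_body); only the model ID differs per version."""
    body = json.dumps({
        "prompt": f"\n\nHuman: {prompt}\n\nAssistant:",
        "max_tokens_to_sample": max_tokens,
    })
    return version.value, body


def extract_completion(response_json):
    """Read the generated text from the (assumed) 'completion' field."""
    return json.loads(response_json)["completion"]
```

Because the body is the same for every version, one code path (or one microflow) can serve all of them.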

“If you want to have a full conversation with Claude 2, you should add the “Human:” tag before all (previous) human inputs and the “Assistant:” tag before all previous outputs generated by Claude 2.

“If you want to ensure that the model doesn’t return anything past a certain phrase, you can add a stop sequence to the request. If the model would have generated that word or phrase, the model will instead return everything that it has generated up to that point.”
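The conversation tagging and the stop sequence can be sketched in Python as follows. This is a minimal illustration, assuming each turn is separated by a blank line and that stop sequences are passed in a `stop_sequences` field; the function names are hypothetical.

```python
import json


def build_conversation_prompt(previous_turns, new_input):
    """Tag earlier turns and the new input the way Claude expects.

    `previous_turns` is a list of (human_text, assistant_text) pairs.
    """
    parts = []
    for human_text, assistant_text in previous_turns:
        parts.append(f"\n\nHuman: {human_text}")
        parts.append(f"\n\nAssistant: {assistant_text}")
    parts.append(f"\n\nHuman: {new_input}")
    parts.append("\n\nAssistant:")  # leave the final turn open for the model
    return "".join(parts)


def build_claude_body(prompt, stop_sequences=None, max_tokens=300):
    """Build the request body, adding stop sequences only when supplied."""
    body = {"prompt": prompt, "max_tokens_to_sample": max_tokens}
    if stop_sequences:
        body["stop_sequences"] = list(stop_sequences)
    return json.dumps(body)
```

For example, `build_conversation_prompt([("Hi", "Hello!")], "How are you?")` yields a prompt that contains the earlier exchange followed by the new question and an open "Assistant:" tag.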

Casper Spronk on calling Amazon Bedrock through AWS Lambda

“During the Amazon Bedrock hackathon, one of our objectives was to test if we could call Amazon Bedrock through an AWS Lambda function as well. This posed some challenges, as Amazon Bedrock was not yet available through AWS Lambda by default. We had to figure out what packages were required to call Amazon Bedrock, or AWS functionality in general, with Python.

“To access AWS with Python, you need at least two packages, boto3 and botocore. Usually, when using additional packages, you would have to upload a layer and add that to your Lambda function. However, the issues we ran into were that:

- The boto3 and botocore packages were already available as layers in a lambda function, and

- These default layers would be called prior to the custom layers that we would add

“After much debugging and frustration, we decided to upload the entire working Python function together with all its dependencies as a lambda function in the hope that this would get the latest versions of boto3 and botocore called instead of the AWS default boto packages. This ended up working and gave us a working lambda function!”
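A handler along these lines could look like the Python sketch below. It is not the function we deployed: it assumes a boto3 version that ships the `bedrock-runtime` client with its `invoke_model` call, and an event carrying hypothetical `modelId` and `body` keys. The optional `client` parameter exists only so the handler can be exercised without AWS access.

```python
import json


def lambda_handler(event, context, client=None):
    """Forward a request to Amazon Bedrock and return the raw model response.

    `event` is assumed to contain "modelId" (str) and "body" (dict).
    """
    if client is None:
        # Imported lazily so the bundled copy in the deployment package is used.
        import boto3
        print("boto3 version in use:", boto3.__version__)  # confirm which copy loaded
        client = boto3.client("bedrock-runtime")

    response = client.invoke_model(
        modelId=event["modelId"],
        body=json.dumps(event["body"]),
        contentType="application/json",
        accept="application/json",
    )
    # The response body is a stream; read it once and return it as text.
    return {"statusCode": 200, "body": response["body"].read().decode("utf-8")}
```

Logging the boto3 version at startup is a cheap way to check whether the bundled packages or the default ones are being picked up.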

Summary (AI)

As mentioned in the beginning, we asked the AI models to give us a summary of this post:

Stable Diffusion XL v2.2.2

Jurassic-2 Ultra

The Amazon Bedrock is a fully managed service that provides the latest foundation models through an API, making it faster and easier for developers to use these models in their applications. As a team, we were excited to get early access to Amazon Bedrock and were impressed with how quickly we were able to integrate and retrieve a list of all the models available.

We held a hackathon day called “Us against Amazon Bedrock” where we experimented with the different models available, including Stable Diffusion XL, Amazon Titan, Jurassic-2 Ultra and Anthropic Claude 2. We were impressed with the results we were able to achieve in a short amount of time and believe that Amazon Bedrock is a valuable tool for Mendix developers looking to create smart, intuitive applications.

In conclusion, Amazon Bedrock is a powerful tool for Mendix developers looking to create smart, intuitive applications with AI capabilities. We found it to be fast and easy to use, and believe it is a valuable addition to the Mendix ecosystem.

Claude 2

Here is a summary of the key points from the blog post:

- The post discusses using Amazon Bedrock, a new service that provides access to foundation models through an API, to quickly build AI-powered apps with Mendix.

- The team held a “Us against Amazon Bedrock” hackathon to test out the service. They were able to integrate and get results from models like Stable Diffusion XL for image generation and Amazon Titan for text generation within an hour.

- Team members share their experiences implementing different foundation models like Stable Diffusion XL, Amazon Titan, Jurassic-2 Ultra, and Anthropic Claude 2 in Mendix and creating proofs of concept.

- They found the service easy to use with clear documentation. The JSON-based APIs could be easily integrated in Mendix using export mappings.

- One challenge was calling Amazon Bedrock from AWS Lambda, but they managed to get it working by bundling all dependencies into the Lambda function.

- Overall, Amazon Bedrock seems like a promising service to help Mendix developers quickly build smart, AI-powered apps by leveraging powerful foundation models. The post provides a hands-on look at how to get started with it.

Amazon Titan Large v1.01

Summary:

Amazon Bedrock is a fully managed service from AWS that makes foundation models from Amazon and leading AI startups available through an API. It promises a quick start to a serverless experience with Foundational models. The team at Mendix has brought together the most intuitive AI services from AWS to help make the smart enterprise application development feel like a walk in the park. The team held a hackathon day to learn about the service, and in just 15 minutes, they successfully integrated and retrieved a list of all the models available in Amazon Bedrock. In just under an hour, they were experimenting with Amazon Titan and producing images using Stability AI’s Stable Diffusion XL. The team members shared their personal experiences of implementing the foundational models within a test application, including the struggles and the exciting moments.

Authors

Agapi Karafoulidou, Casper Spronk, Daan Van Otterloo, Nicolas Kunz Vega