This blog accompanies the Quality Assurance Expert Webinar which can be viewed here. It was written by Clyde Waal, Expert Services Consultant.

In our recent webinar, we provided an introduction to the mindset required to achieve successful quality assurance within your Mendix project. This blog post offers more in-depth information and external references that will help you adopt successful quality assurance practices in your projects.

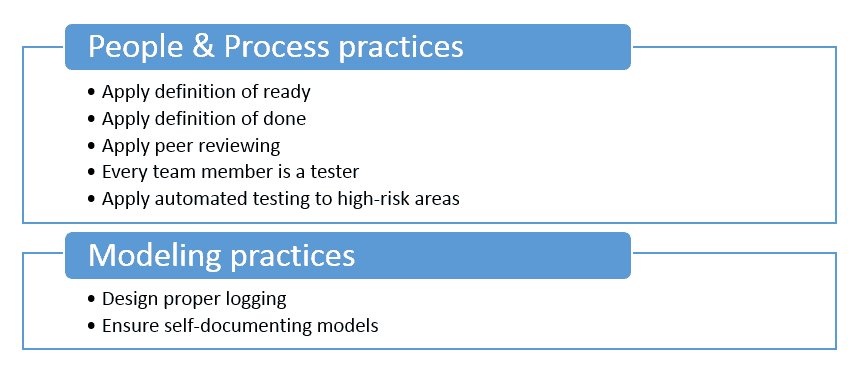

Lightweight quality assurance best practices

One of the key success factors for effective quality assurance is the ability to embed quality-enhancing practices into your projects without burdening your team with a heavy additional workload. We have identified seven quality assurance best practices that will help you achieve this goal:

Best Practice 1. Apply definition of ready

The definition of ready is a set of criteria that a user story must meet before development on it can start. At a minimum, we recommend the following definition of ready criteria:

- Acceptance criteria have been defined.

- Dependencies with other stories have been identified.

- The story has been estimated by the team.

- The team has a good idea of what it will mean to demo the story.

Ensure that your team only places stories on the sprint backlog that meet the mutually agreed-upon definition of ready.
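To make this gate concrete, here is a minimal Python sketch that checks candidate stories against the four criteria listed above before they enter the sprint. The `UserStory` fields and the function names are hypothetical. In practice this check usually happens in conversation during backlog refinement or in your story-tracking tool, not in code.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class UserStory:
    """Hypothetical backlog item; the field names are illustrative only."""
    title: str
    acceptance_criteria: List[str] = field(default_factory=list)
    dependencies_identified: bool = False
    estimate: Optional[int] = None  # story points agreed by the team
    demo_plan: str = ""             # how the team intends to demo the story


def unmet_ready_criteria(story: UserStory) -> List[str]:
    """Return the definition-of-ready criteria this story does not yet meet."""
    missing = []
    if not story.acceptance_criteria:
        missing.append("acceptance criteria defined")
    if not story.dependencies_identified:
        missing.append("dependencies identified")
    if story.estimate is None:
        missing.append("estimated by the team")
    if not story.demo_plan.strip():
        missing.append("demo approach agreed")
    return missing


def plan_sprint(candidates: List[UserStory]) -> Tuple[List[UserStory], Dict[str, List[str]]]:
    """Admit only ready stories to the sprint backlog; report what the others still lack."""
    backlog: List[UserStory] = []
    not_ready: Dict[str, List[str]] = {}
    for story in candidates:
        missing = unmet_ready_criteria(story)
        if missing:
            not_ready[story.title] = missing
        else:
            backlog.append(story)
    return backlog, not_ready


ready = UserStory("Export invoices", ["CSV lists all open invoices"], True, 3, "Download the file live")
vague = UserStory("Improve search")
backlog, not_ready = plan_sprint([ready, vague])
print([s.title for s in backlog])   # ['Export invoices']
print(not_ready["Improve search"])  # all four criteria are still missing
```

The stories that are not ready are not discarded. They are returned with the criteria they still lack, which gives the team a concrete to-do list for refinement.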

Best Practice 2. Apply definition of done

A definition of done is a checklist of criteria that must be met before a product increment can be considered “done.” The team should have a shared understanding of this definition. At a minimum, we recommend the following definition of done criteria:

- The story has been tested in a manner appropriate to its risk profile.

- The story has been modeled in a way that is either self-explanatory or sufficiently annotated to ensure future understanding.

- Model changes have been committed to the Team Server.

We recommend displaying the definition of done on a wall of the team room, so that team members can refer to it easily.

Best Practice 3. Apply peer reviewing

All developed work should be seen by at least two pairs of eyes, because having work verified by a second person reduces the number of bugs introduced. Peer reviewing can be applied sequentially (one team member reviews another member's work at appropriate intervals) or concurrently. In the latter case, developers actually work side by side. This practice, known as pair programming, should only be considered for extremely high-risk areas of your application.

Best Practice 4. Every team member is a tester

Another quality assurance best practice is to remember that every team member is a tester. In fact, every team member is responsible for delivering a tested product. In traditional waterfall environments, testing is often left to designated team members, or even to specialized departments that test only after the development phases are finished. The resulting delay in feedback increases the chance of expensive rework and waste. It also increases the risk of team members working against each other because of conflicting targets and differing reporting lines. To prevent this, make sure that delivering tested, quality work is a shared team goal that every team member contributes to.

Best Practice 5. Apply automated testing to high-risk areas

As your application evolves, the amount of regression testing needed increases. To keep your regression workload manageable, it is important to develop a feel for which high-risk test cases should be automated. It should never be necessary to automate all test cases. Instead, build on the team's shared understanding of your application's high-risk areas and focus any automation there. Risk poker, played during your sprint planning meetings, is a useful technique for eliciting these risks.
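Risk poker is commonly played like planning poker. Each team member privately estimates the risk of an area of the application, and the team discusses outliers before converging. The Python sketch below assumes the team votes separately on likelihood and impact, takes the median of each, and multiplies them into a risk score. Areas at or above a threshold become candidates for automation. The vote scale, the use of the median, the threshold and the area names are illustrative assumptions, not a prescribed method.

```python
from statistics import median
from typing import Dict, List, Tuple

# Hypothetical votes per application area: (likelihood votes, impact votes),
# one vote per team member on a planning-poker-style scale.
Votes = Tuple[List[int], List[int]]


def risk_score(likelihood_votes: List[int], impact_votes: List[int]) -> float:
    """Combine the team's votes into one score: median likelihood x median impact."""
    return median(likelihood_votes) * median(impact_votes)


def select_for_automation(areas: Dict[str, Votes], threshold: float) -> List[str]:
    """Return the areas whose risk score meets the threshold, highest risk first."""
    scores = {name: risk_score(lik, imp) for name, (lik, imp) in areas.items()}
    selected = [name for name, score in scores.items() if score >= threshold]
    # sorted() is stable, so areas with equal scores keep their original order.
    return sorted(selected, key=lambda name: scores[name], reverse=True)


areas = {
    "invoice calculation": ([5, 8, 5], [8, 8, 5]),   # 5 x 8 = 40
    "user profile page": ([2, 1, 2], [2, 3, 2]),     # 2 x 2 = 4
    "payment integration": ([3, 5, 5], [8, 5, 8]),   # 5 x 8 = 40
    "report export": ([3, 3, 2], [5, 3, 5]),         # 3 x 5 = 15
}
print(select_for_automation(areas, threshold=15))
# ['invoice calculation', 'payment integration', 'report export']
```

The median keeps a single outlying vote from dominating the score. The discussion of that outlier during the game is often where the most valuable risk information surfaces.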

For examples of tools that can be used for automated testing, refer to the webinar and the how-tos at the end of this blog post.

Best Practice 6. Design proper logging

Proper logging helps isolate problems in production applications, and it also helps maintain quality during development. Implementing appropriate logging forces you to think about where your application might behave unexpectedly, and about opportunities to make it more robust. Refer to the Error-Handling Expert Webinar for detailed tips on handling errors and logging properly.
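To illustrate logging at the points where an application might behave unexpectedly, here is a minimal Python sketch using the standard `logging` module. The log node name, the exchange-rate scenario and the fallback value are hypothetical. In a Mendix app you would log from your microflows or Java actions to a log node of your choosing. The principle carries over: log every unexpected path with enough context to diagnose it later.

```python
import logging

# Hypothetical log node name; one node per module or integration makes
# messages easy to filter in production.
log = logging.getLogger("Invoicing.ExchangeRates")

DEFAULT_RATE = 1.0  # hypothetical fallback value


def parse_rate(raw: str, currency: str) -> float:
    """Parse an exchange rate received from an external system.

    Every path where the input is not what we expect is logged together with
    the raw value and the currency, so the problem can be diagnosed later.
    """
    try:
        rate = float(raw)
    except ValueError:
        log.error("Unparseable rate %r for %s; falling back to %s", raw, currency, DEFAULT_RATE)
        return DEFAULT_RATE
    if rate <= 0:
        log.warning("Non-positive rate %s for %s; falling back to %s", rate, currency, DEFAULT_RATE)
        return DEFAULT_RATE
    log.debug("Parsed rate %s for %s", rate, currency)
    return rate


logging.basicConfig(level=logging.DEBUG)
parse_rate("1.25", "EUR")  # logs at DEBUG
parse_rate("n/a", "USD")   # logs at ERROR and returns the fallback
parse_rate("-2", "GBP")    # logs at WARNING and returns the fallback
```

Writing the two `if`/`except` branches is exactly the "where might this go wrong?" exercise described above. The logging forces you to name each failure mode, and naming them often reveals that one of them needs more than a fallback.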

Best Practice 7. Ensure self-documenting models

The Mendix Business Modeler provides all the tools you need to make your application models self-documenting. Choosing the caption texts of your activities carefully, and using annotations where needed, goes a long way toward making your models easy to understand. This not only helps your initial quality assurance efforts, but also makes any needed rework easier to carry out in the future.

By adopting the above quality assurance best practices where appropriate, you can embed quality assurance into your Mendix projects, while maintaining the benefits Mendix offers in terms of speed and agility.

3 ways Mendix makes your testing life easier

Because Mendix application functionality is defined at a higher level of abstraction than in most other software development platforms and languages, the platform can perform many automated checks that reduce both the likelihood of defects and the required testing effort. The following three ways in which Mendix makes your testing life easier illustrate this:

Enforcement of consistency within your application model

The Business Modeler continuously checks whether the microflow activities and page elements you define are consistent with the domain model. The Modeler reports an error and prevents deployment of any application that does not pass these consistency checks.

Examples:

- The Modeler will prevent deployment of an application that has an input field connected to an attribute of the wrong type, or to an attribute that no longer exists. An example is a TextBox connected to a Boolean attribute.

- The Mendix platform will automatically hide navigation menu options from the user that lead to pages for which the current user does not have sufficient rights.

- Similarly, the Modeler will report an error if users of a given role can navigate to a page that displays entities and/or attributes that are inaccessible to that role due to security settings.

- When defining data retrievals in your application, the Mendix platform validates whether the resulting database queries are both syntactically and semantically correct and will execute successfully against the database.
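To illustrate the kind of binding check described in the first example above, here is a heavily simplified Python sketch that validates widget bindings against a domain model. It is not how the Business Modeler is implemented. The dictionary-based model and the `WIDGET_ACCEPTS` table of compatible types are assumptions made for the example.

```python
from typing import Dict, List, Tuple

# Hypothetical, heavily simplified type rules: which attribute types a widget may edit.
WIDGET_ACCEPTS = {
    "TextBox": {"String", "Integer", "Decimal"},
    "CheckBox": {"Boolean"},
    "DatePicker": {"DateTime"},
}

DomainModel = Dict[str, Dict[str, str]]  # entity -> {attribute: type}
Binding = Tuple[str, str, str]           # (widget type, entity, attribute)


def check_page(model: DomainModel, bindings: List[Binding]) -> List[str]:
    """Return an error for every widget bound to a missing or wrongly typed attribute."""
    errors = []
    for widget, entity, attribute in bindings:
        attributes = model.get(entity)
        if attributes is None:
            errors.append(f"{widget}: entity '{entity}' does not exist")
        elif attribute not in attributes:
            errors.append(f"{widget}: attribute '{entity}.{attribute}' does not exist")
        elif attributes[attribute] not in WIDGET_ACCEPTS.get(widget, set()):
            errors.append(
                f"{widget}: cannot edit {attributes[attribute]} attribute '{entity}.{attribute}'"
            )
    return errors


model = {"Customer": {"Name": "String", "IsActive": "Boolean"}}
page = [
    ("TextBox", "Customer", "Name"),      # consistent
    ("TextBox", "Customer", "IsActive"),  # wrong type: a TextBox on a Boolean
    ("TextBox", "Customer", "Email"),     # attribute does not exist
]
errors = check_page(model, page)
deployable = not errors  # refuse to deploy while any consistency error remains
print(errors)
```

Because the check runs against the model itself rather than against a running application, such errors surface while you are modeling, before any test case is executed.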

Test implications:

The above consistency checks can point out or prevent defects that could easily slip through in non-Mendix development efforts, leaving you with fewer defects to deal with. In some cases the Modeler also works proactively: when you rename an attribute, it also updates all references to that attribute. This again reduces the chance of defects slipping into your model.

Consistent deployment of your application model

When an application is started, the Mendix platform ensures that the underlying database structure matches the domain model defined within your application model. Since the rest of your application model is also guaranteed to be consistent with the domain model (see the enforcement of consistency described above), the deployed database structure is consistent with all data usage in your application.

Example:

A TextBox in your application not only has a corresponding String attribute in the domain model, but also a corresponding String (or equivalent) column in the underlying SQL database.

Test implications:

It is not necessary to test whether data committed in a Mendix application actually ends up correctly in the underlying SQL database.
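The sketch below illustrates the idea of reconciling a database table with an entity definition at startup. It compares the attributes of a simplified entity with the existing columns and returns the DDL needed to bring them in line. The type mapping, the table name, the decision to drop orphaned columns and the PostgreSQL-style `ALTER COLUMN ... TYPE` syntax are all assumptions for illustration. Mendix performs this synchronization itself, and the column types it actually generates depend on the database in use.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical mapping from attribute types to SQL column types.
SQL_TYPES = {"Boolean": "BOOLEAN", "Integer": "INTEGER", "DateTime": "TIMESTAMP"}

Attribute = Tuple[str, Optional[int]]  # (attribute type, maximum length for strings)


def column_type(attr_type: str, length: Optional[int]) -> str:
    """Map an attribute type (and string length, if any) to a column type."""
    if attr_type == "String":
        return f"VARCHAR({length})" if length else "TEXT"
    return SQL_TYPES[attr_type]


def sync_statements(table: str, attributes: Dict[str, Attribute], columns: Dict[str, str]) -> List[str]:
    """Return DDL that makes the table's columns match the entity's attributes."""
    statements = []
    for name, (attr_type, length) in attributes.items():
        wanted = column_type(attr_type, length)
        current = columns.get(name)
        if current is None:
            statements.append(f"ALTER TABLE {table} ADD COLUMN {name} {wanted}")
        elif current != wanted:
            # PostgreSQL-style syntax; other databases spell this differently.
            statements.append(f"ALTER TABLE {table} ALTER COLUMN {name} TYPE {wanted}")
    for name in columns:
        if name not in attributes:
            # This sketch also drops columns that no longer have a matching attribute.
            statements.append(f"ALTER TABLE {table} DROP COLUMN {name}")
    return statements


entity = {"Name": ("String", 200), "IsActive": ("Boolean", None), "Email": ("String", 100)}
existing = {"Name": "VARCHAR(100)", "IsActive": "BOOLEAN", "Fax": "VARCHAR(50)"}
for statement in sync_statements("customer", entity, existing):
    print(statement)
```

Because the schema is always derived from the model, there is no hand-written migration that can drift out of step with it. That is why checking the round trip to the database is not part of your test scope.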

Standard Mendix components are tested by Mendix

If you envision Mendix as a toolkit with which you build your own apps, you can rest assured that your tools will work as advertised. Our internal quality assurance team rigorously tests whether standard Mendix components work as intended, and continues to do so across platform releases.

Example:

A TextBox connected to a String attribute of a certain maximum length will never accept a value that exceeds this length.

Test implications:

Scope your testing to your own logic rather than to validating Mendix components. Do not test the toolset, but the house you have chosen to build with it.
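To show what testing the house rather than the toolset can look like, here is a minimal Python sketch of tests for a hypothetical discount rule. In a Mendix app, a rule like this would typically live in a microflow and be tested with your chosen test tooling. The function, the threshold and the percentages are invented for the example. Note what is deliberately left out: no test checks that a String attribute enforces its maximum length, because that is standard platform behavior.

```python
from decimal import Decimal

# Hypothetical business rule standing in for logic you built yourself.
BULK_THRESHOLD = Decimal("100")
BULK_RATE = Decimal("0.10")
LOYALTY_RATE = Decimal("0.05")


def order_discount(total: Decimal, loyal_customer: bool) -> Decimal:
    """Discount for an order: 10% from 100 upward, plus 5% for loyal customers."""
    rate = Decimal("0")
    if total >= BULK_THRESHOLD:
        rate += BULK_RATE
    if loyal_customer:
        rate += LOYALTY_RATE
    return (total * rate).quantize(Decimal("0.01"))


def test_discount_rule():
    # Focus on the edges of *your* rule, where your own defects are likely to hide.
    assert order_discount(Decimal("99.99"), False) == Decimal("0.00")
    assert order_discount(Decimal("100.00"), False) == Decimal("10.00")
    assert order_discount(Decimal("100.00"), True) == Decimal("15.00")
    assert order_discount(Decimal("50.00"), True) == Decimal("2.50")

# Deliberately absent: a test that a String attribute rejects values longer than
# its maximum length. That is platform behavior, tested by Mendix.


test_discount_rule()
```

The test values sit on either side of the 100 threshold and combine both conditions. Those are the places where a mistake in your own logic, such as `>` instead of `>=`, would show up.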

While there are many more ways in which the platform reduces the likelihood of defects and costly mistakes, these three major categories of checks should help you get a better understanding of what to test and what not to test.